Using Nokogiri to Scrape Web Pages in Ruby

This past week I had to create the first project for the coding bootcamp I’m in (Flatiron). We were tasked with creating a CLI gem in Ruby that would scrape a web page (or an API, but in my case I used a web page) two layers deep. I would be using Nokogiri to scrape the web page, and at first the idea alone was daunting. How would I do any of that?

I had used the Nokogiri gem in some of our labs, but I didn’t really understand how it worked. What I didn’t realize was that I didn’t need to understand how it worked; I needed to understand how to use it! It’s like many other appliances and tools we use in our daily lives. To cook food on a stove, for instance, you don’t need to understand how each individual component of the stove works, only how to operate it.

The first step to using Nokogiri is installing the gem and then loading it, along with open-uri, at the top of your file:

require 'nokogiri'

require 'open-uri'

If you haven’t ever worked with Nokogiri, you also have to make sure it is listed in your Gemfile (or gemspec) and enter bundle install in your terminal, and be prepared to wait, because Nokogiri can take a while to install. You won’t have to install open-uri because it ships with Ruby. After that you’re ready to start using Nokogiri to parse the HTML of a web page! To do that, assign the parsed HTML to a variable, such as doc, like this:

doc = Nokogiri::HTML(URI.open([URL of the web page you want to scrape]))

What’s happening here is that URI.open is a method provided by open-uri that, from my understanding, fetches the web page’s HTML. (Older tutorials call plain open, but since Ruby 3.0 that no longer works for URLs.) Then Nokogiri turns that HTML into a document of nested nodes (a tree of all of the HTML elements, which you can search a bit like a nested hash) for you to work with! You could perform these steps separately as well, by assigning the HTML to a variable and then passing that variable into Nokogiri, like so:

html = URI.open([URL of web page you want to scrape])

doc = Nokogiri::HTML(html)

For my project, this was the website that I scraped: https://animalcrossing.fandom.com/wiki/Villager_list_(New_Horizons)

To start scraping it, I had:

BASE_URL = "https://animalcrossing.fandom.com/wiki/Villager_list_(New_Horizons)"

doc = Nokogiri::HTML(URI.open(BASE_URL))

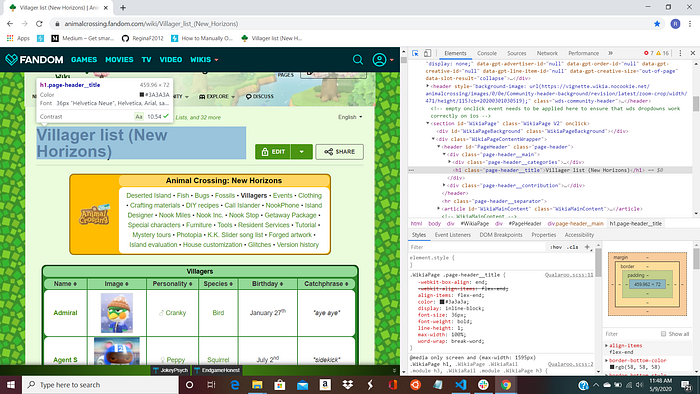

Then let’s say I wanted to scrape the header from this page. In Google Chrome I would inspect the html element of the header by pressing ctrl+shift+i (I believe that’s Command+Option+i on mac) and then hover over the header. Which would look like this:

You can see the header is highlighted along with the text that says “h1.page-header__title”, and off to the right side the highlighted markup is <h1 class="page-header__title">. (Note: the . before page-header__title is CSS selector syntax for a class, which matches the class= attribute you see off to the side.) Sweet, that’s what I need! To scrape it, you call .css on the variable holding the parsed document (doc):

BASE_URL = "https://animalcrossing.fandom.com/wiki/Villager_list_(New_Horizons)"

doc = Nokogiri::HTML(URI.open(BASE_URL))

header = doc.css("h1.page-header__title")

However, the value of header would look like this:

[#<Nokogiri::XML::Element:0x2b14c7af9c98 name="h1" attributes=[#<Nokogiri::XML::Attr:0x2b14c7af9c20 name="class" value="page-header__title">] children=[#<Nokogiri::XML::Text:0x2b14c7af97d4 "Villager list (New Horizons)">]>]

and I just want the text. You can see the part that says

children=[#<Nokogiri::XML::Text:0x2b14c7af97d4 "Villager list (New Horizons)">]

Hmm… I wonder if there is any way to get that text? Like most things in Ruby, there are several ways to accomplish this. Luckily, one of those ways is super simple.

header = doc.css("h1.page-header__title").text

Now header is equal to the string "Villager list (New Horizons)"! Perfection!

Which methods you use, and how easy the job is, depends on the web page and on the information you want to scrape from it. Either way, Nokogiri gives us an object to call these methods on, so we can pull the desired information out of the page’s HTML.

After I started viewing Nokogiri as a tool, it became much less intimidating to use. In the end, I found scraping the information from the web page to be quite fun. Even though I said I don’t need to understand the working parts of Nokogiri in order to use it, it would be awesome to learn more about how it works. My biggest takeaway from this project is that you don’t have to reinvent the wheel: gems like Nokogiri are amazing tools that, when used correctly, let us do complex things in our code with very little effort.